Hello,

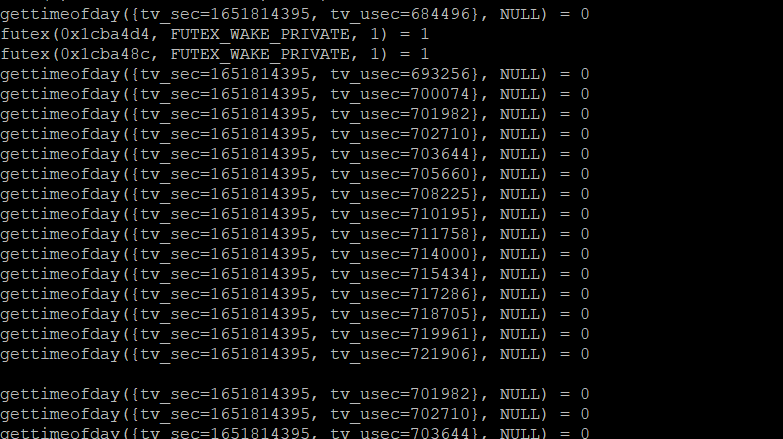

I have a problem. I am accumulating data for a graph, and the data is received over Modbus. Since I started accumulating the data, I have been seeing random CPU spikes (95-98%). Does anyone have a suggestion for how to solve this?

Hardware: BeagleBone Green Gateway

I also uncommented the following block in /var/lib/node-red/.node-red/settings.js (edited with nano):

    contextStorage: {
        default: {
            module: "localfilesystem"
        },
    },
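For context, this is roughly how that fragment sits inside the settings object. It is only a sketch: the `settings` variable name is made up for the example (the real file assigns the object to `module.exports`), and the `config.flushInterval` entry is an assumption based on my reading of the Node-RED context store docs, where it is described as the number of seconds between writes of cached context to disk, with a default of 30. I have not changed it on my system.

```javascript
// Sketch of the relevant part of settings.js; all other settings omitted.
// In the real file this object is assigned to module.exports.
const settings = {
    contextStorage: {
        default: {
            // Persist context values to the local file system.
            module: "localfilesystem",
            // Assumed optional setting (see lead-in): seconds between
            // flushes of the in-memory cache to disk. 30 is the documented default.
            config: {
                flushInterval: 30
            }
        }
    }
};

console.log(settings.contextStorage.default.module);
```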

Here is the flow that accumulates the data:

    [
        {
            "id": "e2eb84e67135a1d1",
            "type": "tab",
            "label": "Flow 1",
            "disabled": false,
            "info": "",
            "env": []
        },
        {
            "id": "36994b8b41eac99e",
            "type": "function",
            "z": "e2eb84e67135a1d1",
            "name": "Data",
            "func": "const data = global.get(\"ALLDATA\");\n\nmsg.payload = {\t\n\"Timestamp\": new Date().getTime(),\n\"R5004\": Number.parseFloat(data.R5004.toFixed(2)),\n\"R5007\": Number.parseFloat(data.R5007.toFixed(2)),\n\"R5012\": Number.parseFloat(data.R5012.toFixed(2)),\n\"R5014\": Number.parseFloat(data.R5014.toFixed(2)),\n\"R5020\": Number.parseFloat(data.R5020.toFixed(2)),\n\"R5021\": Number.parseFloat(data.R5021.toFixed(2)),\n\"R5022\": Number.parseFloat(data.R5022.toFixed(2)),\n\"R5023\": Number.parseFloat(data.R5023.toFixed(2)),\n\"R5024\": Number.parseFloat(data.R5024.toFixed(2)),\n\"R5025\": Number.parseFloat(data.R5025.toFixed(2)),\n\"R5026\": Number.parseFloat(data.R5026.toFixed(2)),\n\"R5028\": Number.parseFloat(data.R5028.toFixed(2)),\n\"R5029\": Number.parseFloat(data.R5029.toFixed(2)),\n\"R5030\": Number.parseFloat(data.R5030.toFixed(2)),\n\"R5031\": Number.parseFloat(data.R5031.toFixed(2)),\n\"R5032\": Number.parseFloat(data.R5032.toFixed(2)),\n\"R5033\": Number.parseFloat(data.R5033.toFixed(2)),\n\"R5034\": Number.parseFloat(data.R5034.toFixed(2)),\n\"R5035\": Number.parseFloat(data.R5035.toFixed(2)),\n\"R5037\": Number.parseFloat(data.R5037.toFixed(2)),\n\"R5038\": Number.parseFloat(data.R5038.toFixed(2)),\n\"R5039\": Number.parseFloat(data.R5039.toFixed(2)),\n\"R5044\": Number.parseFloat(data.R5044.toFixed(2)),\n\"R5045\": Number.parseFloat(data.R5045.toFixed(2)),\n};\n\nreturn msg;",
            "outputs": 1,
            "noerr": 0,
            "initialize": "",
            "finalize": "",
            "libs": [],
            "x": 590,
            "y": 540,
            "wires": [
                [
                    "ca9d2154a7fcb14d"
                ]
            ]
        },
        {
            "id": "f17d37076ba69816",
            "type": "inject",
            "z": "e2eb84e67135a1d1",
            "name": "",
            "props": [
                {
                    "p": "payload"
                },
                {
                    "p": "topic",
                    "vt": "str"
                }
            ],
            "repeat": "1",
            "crontab": "",
            "once": false,
            "onceDelay": 0.1,
            "topic": "",
            "payload": "",
            "payloadType": "date",
            "x": 430,
            "y": 540,
            "wires": [
                [
                    "36994b8b41eac99e"
                ]
            ]
        },
        {
            "id": "ca9d2154a7fcb14d",
            "type": "function",
            "z": "e2eb84e67135a1d1",
            "name": "",
            "func": "const measures = context.get(\"DATA\") || [];\n\n\n\nif(measures.length >= 86400) {\n\n measures.pop();\n\n}\n\n\n\nmeasures.unshift(msg.payload);\n\ncontext.set(\"DATA\", measures);\n\nmsg.payload = measures;\n\nreturn msg;",
            "outputs": 1,
            "noerr": 0,
            "initialize": "",
            "finalize": "",
            "libs": [],
            "x": 760,
            "y": 540,
            "wires": [
                []
            ]
        }
    ]
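To make the logic easier to read without importing the flow, here is the same thing as plain JavaScript that runs outside Node-RED. It is only a sketch: `round2`, `buildPayload`, `accumulate` and the shortened `REGISTERS` list are names I made up for this example. In the real flow the readings come from `global.get("ALLDATA")` and the array is kept in `context` under `"DATA"`. The inject node fires every second, so the limit of 86400 entries corresponds to 24 hours of samples.

```javascript
// Hypothetical, shortened register list; the real flow uses 28 registers.
const REGISTERS = ["R5004", "R5007", "R5012"];

// Round to two decimals the same way the "Data" function node does.
function round2(value) {
    return Number.parseFloat(value.toFixed(2));
}

// Build one sample: a timestamp plus every listed register, rounded.
function buildPayload(data, registers, now = Date.now()) {
    const payload = { Timestamp: now };
    for (const name of registers) {
        payload[name] = round2(data[name]);
    }
    return payload;
}

// Keep the newest sample at index 0 and drop the oldest once the
// array has reached maxLength, as the second function node does.
function accumulate(measures, sample, maxLength) {
    if (measures.length >= maxLength) {
        measures.pop();
    }
    measures.unshift(sample);
    return measures;
}

// Usage with made-up readings and a small limit instead of 86400.
const history = [];
const reading = { R5004: 1.23456, R5007: 2, R5012: 10.005 };
for (let i = 0; i < 5; i++) {
    accumulate(history, buildPayload(reading, REGISTERS, i), 3);
}
console.log(history.length);        // 3
console.log(history[0].Timestamp);  // 4 (newest sample first)
```

Note that the function node in the flow also sets `msg.payload` to the whole array on every run, so each message carries all accumulated samples.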

flows.json (3.1 KB)