Hi folks,

I finally bought some decent cameras...

Handling the RTSP stream - from a cam that sends H.264 for video and AAC for audio - works like a charm, since no re-encoding is required. Almost no CPU usage!

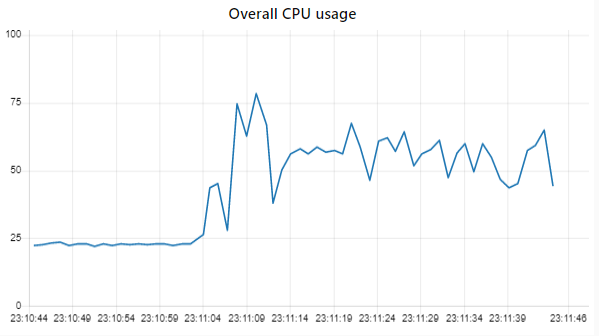

But my troubles started when I tried to extract the I-frames from my stream for image processing. Our ffmpeg guru @kevinGodell already described here very clearly that HIGH CPU usage is normal for encoding JPEGs (since no hardware acceleration is applicable). But I am quite flabbergasted by the amount of CPU being used for only 1 image per second on a Raspberry Pi 4.

That is very weird, because I-frames are already complete image frames, so I am surprised that it still consumes such a large amount of CPU... Therefore I am still hoping that I am doing something wrong with my ffmpeg parameters, and that things can be improved. This is my command:

    [
        "-loglevel", "+level+fatal",
        "-nostats",
        "-rtsp_transport", "tcp",
        "-i", "rtsp://my_cams_rtsp_stream",
        "-f", "mp4",
        "-c:v", "copy",
        "-c:a", "copy",
        "-movflags", "+frag_keyframe+empty_moov+default_base_moof",
        "pipe:1",
        "-progress", "pipe:3",
        "-f", "image2pipe",
        "-vf", "select='eq(pict_type,PICT_TYPE_I)'",
        "-vsync", "vfr",
        "pipe:4"
    ]

But I could not find decent information about image2pipe. If I understand it correctly, image2pipe will extract only the I-frames from the stream, based on the PICT_TYPE_I argument.
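For what it's worth, image2pipe itself only writes the encoded images back to back into the pipe, without any separator (the frame selection comes from the select filter in -vf). So whatever reads pipe:4 has to find the image boundaries itself. Below is a minimal Python sketch of that, assuming the default JPEG output; the helper name `split_jpegs` is made up for illustration, and scanning for the start/end markers is a simplification that could misfire if a marker pair shows up inside a header segment.

```python
from typing import List, Tuple

SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker


def split_jpegs(buffer: bytes) -> Tuple[List[bytes], bytes]:
    """Split a byte buffer into complete JPEG images plus the unconsumed tail.

    Hypothetical helper: call it with the previous tail prepended to each new
    chunk that was read from the pipe.
    """
    frames: List[bytes] = []
    pos = 0
    while True:
        start = buffer.find(SOI, pos)
        if start == -1:
            # No image start left. Keep a trailing 0xFF, because it may be
            # the first half of a marker that continues in the next chunk.
            tail = buffer[pos:]
            return frames, (tail[-1:] if tail.endswith(b"\xff") else b"")
        end = buffer.find(EOI, start + len(SOI))
        if end == -1:
            # The image is incomplete; keep it until more data arrives.
            return frames, buffer[start:]
        end += len(EOI)
        frames.append(buffer[start:end])
        pos = end
```

Counting the frames this returns per second is another way to check how many images ffmpeg really emits.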

Not sure where all the CPU is being eaten:

- Does it take a lot of CPU time to find the I-frames in the stream for some reason?

- Does it take a lot of CPU time to encode the I-frame to a JPEG image?

  To determine this, I tried this tip from StackOverflow to use uncompressed BMP images. Such BMPs are not useful for me, but it is a way to do a root cause analysis. However, when I add "-c:v bmp" or "-c:v rawvideo", I expected the CPU usage to drop because no JPEG encoding would be required. But it doesn't change anything.

- Does it perhaps select too many frames, so that a lot of unnecessary encoding is required?

  I don't think this is the problem, because my camera has a frame rate of 1 frame/sec, and when I add a node-red-contrib-msg-speed node at the output, that one also indicates about 1 message per second.
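To put numbers on the three questions above instead of eyeballing top, the CPU time of each command variant could be measured over a fixed window. This is only a rough Python sketch for Linux (such as the Raspberry Pi); the function name `cpu_seconds` and the 30 second default window are my own assumptions, and the demo uses a stand-in workload so that it runs without a camera.

```python
import resource
import subprocess
import sys
from typing import List


def cpu_seconds(cmd: List[str], timeout: float = 30.0) -> float:
    """Run cmd for at most `timeout` seconds and return its user+system CPU time."""
    before = resource.getrusage(resource.RUSAGE_CHILDREN)
    proc = subprocess.Popen(
        cmd, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL
    )
    try:
        proc.wait(timeout=timeout)
    except subprocess.TimeoutExpired:
        # A live RTSP stream never ends, so stop the process after the window.
        proc.terminate()
        proc.wait()
    # RUSAGE_CHILDREN only includes children that have been waited for.
    after = resource.getrusage(resource.RUSAGE_CHILDREN)
    return (after.ru_utime - before.ru_utime) + (after.ru_stime - before.ru_stime)


if __name__ == "__main__":
    # Stand-in workload; replace it with an ffmpeg argument list to compare variants.
    demo = [sys.executable, "-c", "sum(range(10**6))"]
    print(f"{cpu_seconds(demo):.2f} CPU seconds")
```

Running it once with only the MP4 copy output and once with the image output added should show how much of the load belongs to the image branch.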

I have run completely out of ideas. If anybody has tips, all help is appreciated!!!!

P.S. I am aware that I could lower the CPU usage by e.g. adjusting the frame rate, resolution, ... in the IP camera's web interface. But I would like to understand why the performance is so horrible with my current settings.

Thanks!!

Bart