Hi everyone,

I am sending a bunch of MQTT messages using Node-RED and would like to make sure that each new batch is delayed until the previous batch is finished.

I tried adding a fixed delay before each output with `setTimeout` in a Function node:

```javascript
msg.payload = "";
msg.topic = "";
msg.selectRange = "Ax:Ay";

// Send a fresh copy of msg with the given setting, so changing the
// setting later cannot affect a message that was already sent
function withSetting(setting) {
    const m = RED.util.cloneMessage(msg);
    m.setting = setting;
    return m;
}

// Output to output 1 (immediately)
node.send([withSetting("ip"), null, null, null, null, null, null, null, null, null]);

// Delay and output to output 2
setTimeout(() => {
    node.send([null, withSetting("template"), null, null, null, null, null, null, null, null]);
}, 10000); // Delay in milliseconds (10 seconds)

// Delay and output to output 3
setTimeout(() => {
    node.send([null, null, withSetting("settings"), null, null, null, null, null, null, null]);
}, 20000); // Delay in milliseconds (20 seconds)

// Delay and output to output 4
setTimeout(() => {
    node.send([null, null, null, withSetting("timers"), null, null, null, null, null, null]);
}, 30000); // Delay in milliseconds (30 seconds)

// Delay and output to output 5
setTimeout(() => {
    node.send([null, null, null, null, withSetting("rule1"), null, null, null, null, null]);
}, 35000); // Delay in milliseconds (35 seconds)

// Delay and output to output 6
setTimeout(() => {
    node.send([null, null, null, null, null, withSetting("rule2"), null, null, null, null]);
}, 40000); // Delay in milliseconds (40 seconds)

// Delay and output to output 7
setTimeout(() => {
    node.send([null, null, null, null, null, null, withSetting("rule3"), null, null, null]);
}, 45000); // Delay in milliseconds (45 seconds)

// Delay and output to output 8
setTimeout(() => {
    node.send([null, null, null, null, null, null, null, withSetting("rule_commands"), null, null]);
}, 50000); // Delay in milliseconds (50 seconds)

// Delay and output to output 9
setTimeout(() => {
    node.send([null, null, null, null, null, null, null, null, withSetting("custom"), null]);
}, 55000); // Delay in milliseconds (55 seconds)

// // Delay and output to output 10
// setTimeout(() => {
//     node.send([null, null, null, null, null, null, null, null, null, withSetting("custom")]);
// }, 65000); // Delay in milliseconds (65 seconds)
```

But depending on how many steps each batch has to process, a fixed delay is either too long (wasting time) or too short (the recipient might not be listening yet).

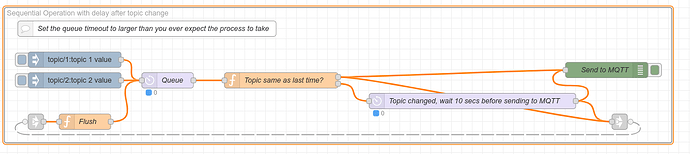

Is it possible to delay until 5 seconds after the last debug message was observed? In other words, once no new debug messages have arrived for 5 seconds, send the next msg to the next output?
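What is described here is an idle timeout (a debounce): send step N, restart a 5-second timer every time a reply arrives, and send step N + 1 only when that timer runs out. Below is a minimal sketch in plain JavaScript, assuming the messages that currently go to the debug node are also wired into the Function node (a Function node cannot read the debug sidebar itself). `IdleSequencer`, `outputsFor`, `start()` and `activity()` are hypothetical names, not Node-RED APIs; the timer functions are injectable only so the logic can be tested without real waiting.

```javascript
// Sketch of an idle-timeout sequencer: send step N, then wait until no
// activity has been reported for idleMs before sending step N + 1.
// IdleSequencer and outputsFor are hypothetical helpers, not Node-RED APIs.

// Build the array node.send() expects: the message on output `index`
// (0-based) and null on every other output.
function outputsFor(index, outputCount, message) {
    const outputs = new Array(outputCount).fill(null);
    outputs[index] = message;
    return outputs;
}

class IdleSequencer {
    // steps:    settings to send in order, e.g. ["ip", "template", ...]
    // idleMs:   quiet period (ms) required before moving to the next step
    // sendStep: callback(index, setting) that actually sends the message
    // timers:   injectable timer functions (defaults to the real ones)
    constructor(steps, idleMs, sendStep, timers = {
        setTimeout: (fn, ms) => setTimeout(fn, ms),
        clearTimeout: (id) => clearTimeout(id),
    }) {
        this.steps = steps;
        this.idleMs = idleMs;
        this.sendStep = sendStep;
        this.timers = timers;
        this.index = -1;   // index of the step most recently sent
        this.timer = null; // pending "quiet period elapsed" timer
    }

    // Begin (or restart) the sequence with the first step.
    start() {
        this.stop();
        this.index = -1;
        this.next();
    }

    // Call for every reply observed: restarts the quiet period.
    // Ignored when no step is waiting (before start() or after the last step).
    activity() {
        if (this.timer !== null) {
            this.arm();
        }
    }

    // Cancel the pending timer, if any.
    stop() {
        if (this.timer !== null) {
            this.timers.clearTimeout(this.timer);
            this.timer = null;
        }
    }

    // (Re)start the quiet-period timer for the current step.
    arm() {
        this.stop();
        this.timer = this.timers.setTimeout(() => this.next(), this.idleMs);
    }

    // Quiet period elapsed: send the next step, or finish.
    next() {
        this.timer = null;
        this.index += 1;
        if (this.index >= this.steps.length) {
            return; // all steps sent and the final quiet period has passed
        }
        this.sendStep(this.index, this.steps[this.index]);
        this.arm();
    }

    isDone() {
        return this.index >= this.steps.length;
    }
}
```

Inside a Function node with ten outputs, one possible wiring is to keep a single instance across messages (for example in `context`, assuming the default in-memory store), call `start()` when the trigger message arrives and `activity()` for every reply, and have `sendStep` call `node.send(outputsFor(index, 10, m))` with a copy made by `RED.util.cloneMessage(msg)` whose `setting` is the step name. How the trigger is told apart from the replies (for example by `msg.topic`) depends on the flow and is left open here.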

Thank you all

Alex