Hi Node-RED community,

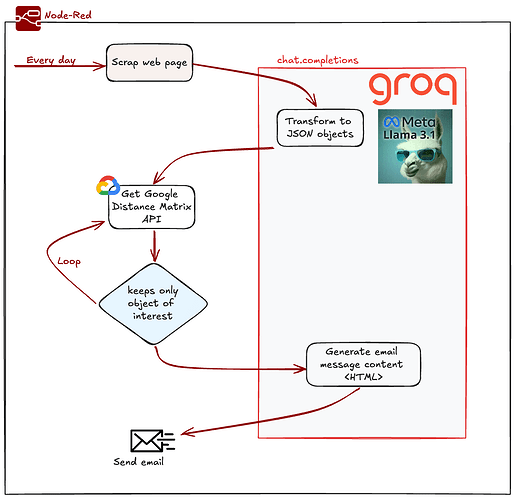

I wanted to share a workflow I’ve been working on that integrates Node-RED with LLaMA 3.1 (via Groq) and the Google Distance Matrix API. The goal is to automate the process of web scraping, data transformation, and sending personalized HTML emails based on specific criteria. Below is a detailed breakdown of the workflow:


Workflow Overview:

- Web Scraping:

• The flow begins with a scheduled trigger every Saturday at 7:00 AM. It scrapes a webpage and extracts the body text.

- Data Cleaning and Transformation:

• The scraped HTML content is cleaned and transformed into JSON objects by an LLM (LLaMA 3.1 via Groq), which formats the raw text into structured JSON.
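For anyone rebuilding this step: LLM replies often wrap the JSON in extra text, so a small parsing helper in a function node can help. This is only a minimal sketch, not the exact code from my flow. The helper name `extractJsonArray` and the `name`/`address` fields are made up for illustration, and it assumes the model was asked to return a JSON array.

```javascript
// Hypothetical helper: pull a JSON array out of an LLM reply that may
// contain extra prose or code-fence markers around the JSON itself.
function extractJsonArray(reply) {
  const start = reply.indexOf("[");
  const end = reply.lastIndexOf("]");
  if (start === -1 || end <= start) {
    throw new Error("No JSON array found in LLM reply");
  }
  // JSON.parse throws a SyntaxError if the slice is not valid JSON.
  const parsed = JSON.parse(reply.slice(start, end + 1));
  if (!Array.isArray(parsed)) {
    throw new Error("LLM reply did not contain a JSON array");
  }
  return parsed;
}

// Example reply with assumed field names (not taken from the real flow).
const sampleReply =
  'Here is the data:\n[{"name": "Item A", "address": "Example Street 1, Sampletown"}]\nDone.';
const items = extractJsonArray(sampleReply);
console.log(items.length, items[0].address);
```

In a function node, something like `msg.payload = extractJsonArray(msg.payload); return msg;` would pass the parsed objects on to the loop.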

- Distance Calculation:

• The flow loops over the JSON objects. For each one, it sets the address and configures the request parameters for the Google Distance Matrix API.

• The API calculates the distance for each object, and only those objects that meet specific criteria are retained.
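To make the distance step more concrete, here is a rough sketch of how the request and the filter could look. The endpoint, the `origins`/`destinations`/`key` parameters, and the `rows[0].elements[0].distance.value` response path (in meters) come from the Distance Matrix API. The function names, the sample addresses, and the 25 km limit are assumptions for illustration only, since the actual criteria depend on your use case.

```javascript
// Sketch only: helper names, sample addresses, and the limit are assumptions.
const DISTANCE_MATRIX_URL =
  "https://maps.googleapis.com/maps/api/distancematrix/json";

// Build the request URL for one origin/destination pair.
function buildDistanceMatrixUrl(origin, destination, apiKey) {
  const url = new URL(DISTANCE_MATRIX_URL);
  url.searchParams.set("origins", origin);
  url.searchParams.set("destinations", destination);
  url.searchParams.set("units", "metric");
  url.searchParams.set("key", apiKey);
  return url.toString();
}

// Read the distance in meters from a response, or null if unavailable.
function distanceMeters(response) {
  if (!response || response.status !== "OK") return null;
  const element = response.rows?.[0]?.elements?.[0];
  if (!element || element.status !== "OK") return null;
  return element.distance.value;
}

// Keep an object only if its distance is known and within the limit.
function isWithinRange(response, maxMeters) {
  const meters = distanceMeters(response);
  return meters !== null && meters <= maxMeters;
}

// Hand-written sample response in the shape the API returns.
const sampleResponse = {
  status: "OK",
  rows: [{ elements: [{ status: "OK", distance: { text: "12.3 km", value: 12300 } }] }],
};
console.log(isWithinRange(sampleResponse, 25000));
```

In the flow, the URL would go into `msg.url` for an http request node, and the filter would run on the parsed response.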

- HTML Email Generation:

• Once the relevant objects are filtered, the flow uses LLaMA 3.1 again to generate the HTML email content and sends the email via a configured SMTP node.
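If you want to try the email generation step yourself, the request to Groq could be built roughly like this. It is a sketch under assumptions: it uses Groq's OpenAI-compatible chat completions endpoint, the model id `llama-3.1-8b-instant` is just one possible choice, and the prompt wording, the helper name `buildEmailRequest`, and the sample item are invented for illustration.

```javascript
// Groq exposes an OpenAI-compatible chat completions endpoint.
const GROQ_CHAT_URL = "https://api.groq.com/openai/v1/chat/completions";

// Hypothetical helper: build the request body that asks the model to
// turn the filtered objects into an HTML email. The model id and the
// prompt text are assumptions, not the ones used in the original flow.
function buildEmailRequest(items, model = "llama-3.1-8b-instant") {
  return {
    model,
    temperature: 0.2,
    messages: [
      {
        role: "system",
        content: "You write short HTML emails. Reply with HTML only, no explanations.",
      },
      {
        role: "user",
        content:
          "Write an HTML email that lists these items:\n" +
          JSON.stringify(items, null, 2),
      },
    ],
  };
}

const body = buildEmailRequest([
  { name: "Item A", address: "Example Street 1, Sampletown" },
]);
console.log(GROQ_CHAT_URL, body.messages.length);
```

With an http request node, this body would go into `msg.payload`, the URL into `msg.url`, and the API key into an `Authorization: Bearer ...` entry in `msg.headers`. The generated HTML should then be in `choices[0].message.content` of the response.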

- Error Handling:

• The flow includes error handling logic that catches issues with the Google API and sends a notification through a connected Home Assistant instance if something goes wrong.
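As an illustration of the error check, here is one way it could be written. The status values (`OK` at the top level and per element) follow the Distance Matrix response format, and `title`/`message` are the usual fields for a Home Assistant notify service. The helper names and the wording of the messages are assumptions, so treat this as a sketch rather than the exact logic in my flow.

```javascript
// Hypothetical helper: return a short problem description for a
// Distance Matrix response, or null if the response is usable.
function describeApiProblem(response) {
  if (!response || response.status !== "OK") {
    const status = response ? response.status : "no response";
    const detail =
      response && response.error_message ? ": " + response.error_message : "";
    return "Distance Matrix request failed (" + status + ")" + detail;
  }
  const element = response.rows?.[0]?.elements?.[0];
  if (!element || element.status !== "OK") {
    const status = element ? element.status : "missing element";
    return "No usable route for this address (" + status + ")";
  }
  return null;
}

// Hypothetical helper: build the data for a Home Assistant notification.
function buildNotification(problem, sourceName) {
  return {
    title: "Node-RED flow error",
    message: sourceName + ": " + problem,
  };
}

const denied = { status: "REQUEST_DENIED", error_message: "The provided API key is invalid." };
const problem = describeApiProblem(denied);
if (problem !== null) {
  console.log(buildNotification(problem, "Distance check"));
}
```

If a catch node is used instead, the error text is available in `msg.error.message` and the failing node in `msg.error.source`, which could be passed to the same notification helper.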

Key Components:

• LLaMA 3.1: Used twice in the flow: once to transform the scraped data into JSON, and again to generate the HTML email content.

• Google Distance Matrix API: Responsible for calculating distances, helping filter objects based on geographic relevance.

• Node-RED: Orchestrates the entire process, from scraping to email dispatch, including the error handling.

This flow shows how Node-RED can combine external APIs, an LLM, and scheduled automation in a single workflow.

Feel free to ask any questions or suggest improvements! I’m looking forward to your feedback.

Best,

Joel