[This was posted in another old thread but buried in the responses.]

I've released version 2.0.0-alpha of the Google Action node. Most simple conversation types work, but the more complex ones don't yet. You can install it using:

npm install node-red-contrib-google-action@alpha

Working with the Google Assistant backend API is really painful - it's one of those APIs that has been thrown together to support a variety of disparate user interface technologies for a range of applications that haven't been fully designed yet. It's really experimental.

This version of the Google Action node moves away from using the Actions SDK provided by Google because it was simply too incompatible with the Node Red framework - the Actions 2.0 SDK is written to act as the framework for a Node app, and smashing the two frameworks together was nasty.

Instead of using the Actions SDK, this version uses the webhooks provided by Google. This gives you a lot more flexibility to implement your conversation flow in Node Red.

Note that this doesn't support Dialogflow projects because a) the dialog flow can be easily implemented in Node Red, b) there wasn't much to be gained from Dialogflow for apps with one or two users (Dialogflow uses machine learning to understand the semantics of what people are saying, which requires lots of people to use the app), and c) Dialogflow won't accept self-signed SSL certificates, which makes it difficult for hobbyists and experimental developers.

There are three nodes in the Google Action package - start, ask, and tell.

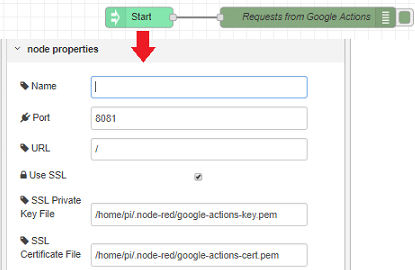

start is the starting point for a new conversation. It listens for new conversation requests from Google Assistant and outputs a msg for each new conversation. Behind the scenes, it keeps track of each conversation as it flows through Node Red and sends subsequent requests from Google Assistant to the appropriate ask node.
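To give a feel for the conversation tracking described above, here is a minimal sketch. It is not the node's actual code: the `ConversationRouter` class, its method names, and the `conversationId` and `text` request fields are all made up for illustration.

```javascript
// Hypothetical sketch of conversation tracking; names and request
// fields are assumptions, not the real node's internals.
class ConversationRouter {
  constructor() {
    // conversationId -> callback registered by the ask node that is waiting
    this.pending = new Map();
  }

  // An ask node calls this after prompting the user.
  waitForReply(conversationId, onReply) {
    this.pending.set(conversationId, onReply);
  }

  // Called for every incoming webhook request.
  // Returns "new" when a conversation is started and "routed" when the
  // request was handed to the ask node waiting on that conversation.
  handleRequest(request, onNewConversation) {
    const msg = { conversationId: request.conversationId, payload: request.text };
    const waiting = this.pending.get(request.conversationId);
    if (waiting === undefined) {
      onNewConversation(msg);
      return "new";
    }
    // Each ask node handles exactly one turn, so forget it once it has replied.
    this.pending.delete(request.conversationId);
    waiting(msg);
    return "routed";
  }
}
```

The point of the lookup table is that each later request in a conversation reaches whichever ask node is currently waiting, so the flow itself never has to match requests to turns.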

ask nodes prompt the user for input and represent a turn in a conversation. There is a range of prompt types available, including Simple, Simple Selection, Date and Time, Confirmation, Address, Name and Location. The more complex ones are handled by Google Assistant - for example, for Address the user can respond "McDonald's" and Google Assistant will automatically list all the nearby McDonald's for the user to select from, with the street address of the selection being returned to Node Red. The ask node has two outputs - the first is for the user's response, the second is for when the user cancels the conversation or doesn't respond before the timeout.
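The two-output behaviour follows the usual Node Red convention where a node with several outputs sends an array, one element per output, with null meaning "send nothing on this output". A rough sketch of that routing is below. The `routeAskResult` function and the shape of `result` (`text`, `cancelled`, `timedOut`) are assumptions for illustration only, not what the ask node actually produces.

```javascript
// Hypothetical sketch: decide which of the two outputs receives the msg.
// The `result` fields are assumed for illustration.
function routeAskResult(msg, result) {
  if (result.cancelled || result.timedOut) {
    // Second output: the user cancelled or did not respond in time.
    return [null, msg];
  }
  // First output: pass the user's response on as the payload.
  msg.payload = result.text;
  return [msg, null];
}
```

In a flow, the first output would typically carry on to the next ask or tell node, and the second would go to a tell node that wraps up the cancelled or timed-out conversation.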

tell nodes tell the user something and terminate the conversation. All conversation paths should end with a tell node, even ones that have been cancelled or timed out.

The Google Action nodes work with all Google Home and Assistant devices such as speakers, smart phones and hubs.

I really like the way this version allows you to integrate a conversation with a Node Red flow - the user conversation becomes part of the flow, rather than the flow being about handling the conversation.

Anyhow, have a play with it and give me any feedback.

Dean

For the last few days I have been looking into Google Actions as well. Could you please provide a node that, instead of using an HTTP endpoint, just accepts a payload via input from another source?
