Thanks a lot for the suggestions. Regarding them:

Regarding the loops, the application is a finite state machine. I need to loop back to feed the status into the machine. It is true that I could have an inject node going at intervals, but the main point of looping back is that I can control the timing of the loop.

For instance, when the AGV is offline I loop back after 5 seconds, when idle but not in a known position, I loop back after 3 seconds, but when docking, for instance, since I have to check the status for some sensors in the machine side, the loop is happening after one second.

Those delays are controlled by the trigger nodes in the transition subflow. Only one branch is active each iteration, and the trigger node only sends one reply, so it won't loop back unless the flow has finished doing its job.

Another option would be to have an inject node checking for a flag on each AGV object, then if the flag is on, start the flow, reset the flag, download the next state from the global variables, insert it into the FMS, and after it has processed the transition, set the next state and the flag again. This might work, but it is basically the same as a loop.

The problem with this approach is that I would need an inject node with a relatively short timing, like 500ms, to catch the flags when they come up, or I could miss some of them. Either case, it looks like checking the global variables so often would generate more CPU usage than just looping back when the node is finished and sends one message.

Regarding the global.get and global.set flags, you are right. I think I could download the current state as an object at the beginning of the transition state, work on it, then upload it at the end of the transition.I had a lot of problems with the old one, because after using a GET or POST would lose my payload and configuration info. In the new version, I use this message.agv to store the name of the AGV i refer to, and this allows me to download all the information from the global variables. I guess I can also store whatever I need outside of the payload, and that would not be affected by the node.

Regarding the missing variable: That is the from the old flow. I guess I missed that one on the new. Dangers of copy-pasting.

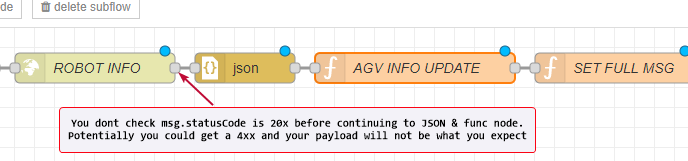

Checking the status code: That was on the to-do list, but not a critical issue. The robot server is dedicated for the AGVs fleet manager and nothing else, and unless the server is down (in which case nothing will work in the factory, because it means the server cluster is down), it will always reply.

If the robot is down, it will reply with a REGISTERED_OFFLINE status, which I already handle as an exception. If it's online, I'll have all the normal data. I can always throw in a switch node to interrupt the flow unless the statuscode is 20x, and maybe generate an error code in the global variables.