OK, I tried the batch node using this flow:

[{"id":"262a3923.e7b216","type":"influxdb in","z":"e34b3a780748064f","influxdb":"eeb221fb.ab27f","name":"","query":"SELECT last(\"HTFN1Z1T\") FROM \"stations\" WHERE time >= 1609480800000ms and time <= 1610773199000ms GROUP BY time(1m) fill(null)","rawOutput":false,"precision":"ms","retentionPolicy":"","org":"my-org","x":340,"y":280,"wires":[["467fc95d165f998e"]]},{"id":"803d82f.ff80f8","type":"inject","z":"e34b3a780748064f","name":"","repeat":"","crontab":"","once":false,"onceDelay":0.1,"topic":"","payload":"","payloadType":"date","x":240,"y":200,"wires":[["262a3923.e7b216"]]},{"id":"467fc95d165f998e","type":"split","z":"e34b3a780748064f","name":"","splt":"\\n","spltType":"str","arraySplt":1,"arraySpltType":"len","stream":false,"addname":"","x":450,"y":360,"wires":[["14f2646498b1cb03"]]},{"id":"14f2646498b1cb03","type":"function","z":"e34b3a780748064f","name":"","func":"msg.payload = [\n {\n measurement:\"SingleRotaryFurnaceZoneData\",\n fields: {\n temperature: msg.payload.last\n },\n tags:{\n MeasType:\"actual\",\n EquipNumber:\"1\",\n EquipZone:\"zone1\"\n },\n timestamp: msg.payload.time\n }\n];\nreturn msg;","outputs":1,"noerr":0,"initialize":"","finalize":"","libs":[],"x":580,"y":480,"wires":[["31fda5cb865c09ab"]]},{"id":"31fda5cb865c09ab","type":"influxdb batch","z":"e34b3a780748064f","influxdb":"e55f0c8e.1659f","precision":"","retentionPolicy":"","name":"","database":"database","precisionV18FluxV20":"ms","retentionPolicyV18Flux":"","org":"heattreat","bucket":"junkbucket","x":830,"y":520,"wires":[]},{"id":"eeb221fb.ab27f","type":"influxdb","hostname":"172.31.19.130","port":"8086","protocol":"http","database":"DST","name":"test","usetls":false,"tls":"d50d0c9f.31e858","influxdbVersion":"1.x","url":"http://localhost:8086","rejectUnauthorized":true},{"id":"e55f0c8e.1659f","type":"influxdb","hostname":"3.17.31.71","port":"8086","protocol":"http","database":"DST","name":"","usetls":false,"tls":"","influxdbVersion":"2.0","url":"http://192.168.10.25:8086","rejectUnauthorized":false},{"id":"d50d0c9f.31e858","type":"tls-config","name":"","cert":"","key":"","ca":"","certname":"","keyname":"","caname":"","servername":"","verifyservercert":false}]

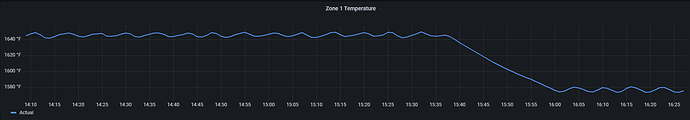

and got the same ENOBUFS error. I reduced the time span to 15 days (21,600 values at one per minute) and got the same error. When I reduced it to 5 days (7,200 values), it worked.

I think I understand the problem: I am sending too many values at once. Perhaps I need a loop or something similar that sends 5 days at a time?
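
Here is a rough sketch of what such a loop might look like in a function node. It is untested against this flow and rests on a few assumptions: the helper names (`splitIntoWindows`, `buildQuery`, `toBatchPoints`) are made up for illustration, the influxdb in node has its query field left empty so that it uses `msg.query`, and each query result arrives as an array of rows with `time` and `last` properties. The last helper builds all the points for one window in a single message, as an alternative to splitting the result into one message per value.

```javascript
// Hypothetical helpers, not part of Node-RED or node-red-contrib-influxdb.
const DAY_MS = 24 * 60 * 60 * 1000;

// Split [startMs, endMs] into consecutive, non-overlapping windows.
// Both ends are inclusive, matching "time >= ... and time <= ..." in the query.
function splitIntoWindows(startMs, endMs, windowMs) {
    const windows = [];
    for (let from = startMs; from <= endMs; from += windowMs) {
        const to = Math.min(from + windowMs - 1, endMs);
        windows.push({ from, to });
    }
    return windows;
}

// Build the same InfluxQL query as in the flow, but for one window.
function buildQuery(win) {
    return 'SELECT last("HTFN1Z1T") FROM "stations" ' +
        `WHERE time >= ${win.from}ms and time <= ${win.to}ms ` +
        'GROUP BY time(1m) fill(null)';
}

// Turn one query result (assumed: array of { time, last } rows) into a
// single array of points in the shape used by the flow's function node.
// Rows where fill(null) produced no value are skipped.
function toBatchPoints(rows) {
    return rows
        .filter((row) => row.last !== null && row.last !== undefined)
        .map((row) => ({
            measurement: "SingleRotaryFurnaceZoneData",
            fields: { temperature: row.last },
            tags: { MeasType: "actual", EquipNumber: "1", EquipZone: "zone1" },
            timestamp: row.time
        }));
}

// Example: the time range from the flow, cut into 5-day windows.
const exampleWindows = splitIntoWindows(1609480800000, 1610773199000, 5 * DAY_MS);
const exampleMessages = exampleWindows.map((win) => ({ query: buildQuery(win) }));
console.log(exampleMessages.length + " query messages");
```

In a function node placed before the influxdb in node, something like `return [exampleMessages];` would emit one message per window on the first output. A second function node after the query could then set `msg.payload = toBatchPoints(msg.payload)` in place of the split node. The messages are sent back to back, so a delay node in rate-limit mode between the two may still be needed to pace the writes.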