Hello all,

I'm really new to Node-RED and programming, so maybe this question sounds dumb. There is a dynamic website that publishes values in a table every 5 minutes, always adding a new row to it. I want to be able to extract the last row of data whenever required, so that I can later do mathematical calculations with the values, but I'm unable to extract the data. I have not had this issue with static web pages, since scraping them with the HTTP request node has been relatively easy.

The web page is Seguimiento de la demanda de energía eléctrica (electricity demand tracking); sorry that the page is in Spanish.

Thanks in advance.

There is no need to web scrape, and as you have found, it doesn't work anyway.

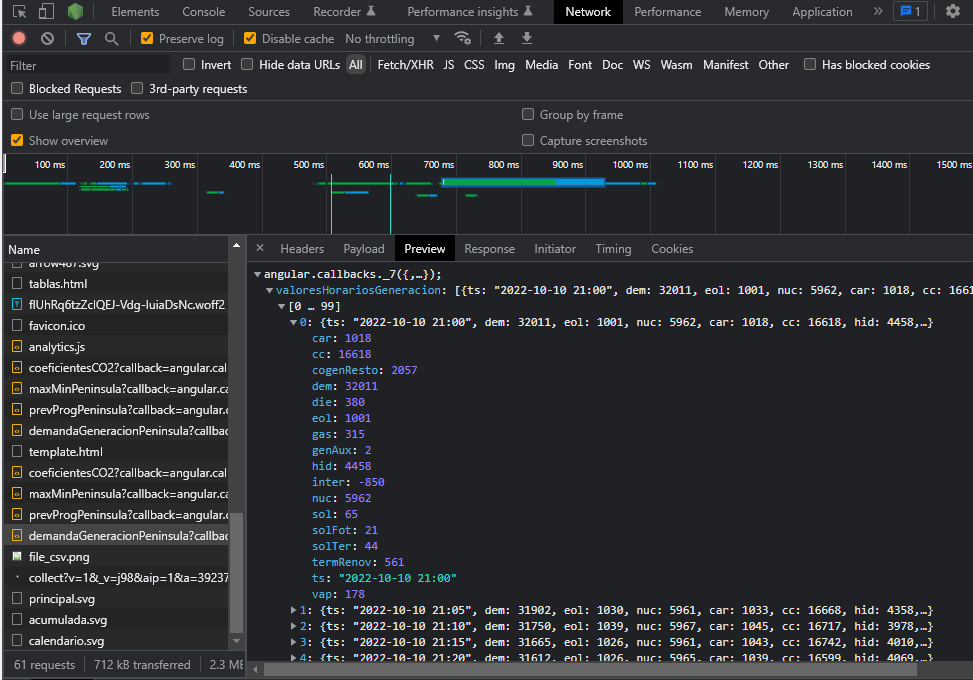

That is because a dynamic page fetches its data after the page itself has loaded, using AJAX.

Open your browser's DevTools and watch the Network tab: you will see that the requests for the data are returned in JSON format.

Locate the URL that returns the data of interest and point an HTTP Request node at it (be sure to set it to return a parsed JSON object).
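To fetch the data whenever it is needed rather than for one fixed day, one option is to build the URL in a Function node placed before the HTTP Request node and leave that node's URL field empty, in which case the request node uses `msg.url`. This is a minimal sketch, assuming the endpoint accepts any day through the `fecha=YYYY-MM-DD` query parameter, as in the URL quoted later in this thread; `formatLocalDate`, `buildDemandUrl` and `setRequestUrl` are hypothetical names. To use it, paste the code into the Function node and add `return setRequestUrl(msg);` at the end.

```javascript
// Base address of the data endpoint used in this thread.
const BASE_URL =
  "https://demanda.ree.es/WSvisionaMovilesPeninsulaRest/resources/demandaGeneracionPeninsula";

// Format a Date as YYYY-MM-DD in the local time zone of the machine running
// Node-RED (toISOString() would use UTC and can give the wrong day near midnight).
function formatLocalDate(date) {
  const yyyy = date.getFullYear();
  const mm = String(date.getMonth() + 1).padStart(2, "0");
  const dd = String(date.getDate()).padStart(2, "0");
  return `${yyyy}-${mm}-${dd}`;
}

// Hypothetical helper: build the request URL for a given day. The callback
// parameter is kept so the response has the same shape as in this thread.
function buildDemandUrl(date) {
  const params = new URLSearchParams({
    callback: "angular.callbacks._3",
    curva: "NACIONAL",
    fecha: formatLocalDate(date), // assumption: any YYYY-MM-DD date is accepted
  });
  return `${BASE_URL}?${params.toString()}`;
}

// Function node logic: set msg.url to today's URL for the HTTP Request node.
function setRequestUrl(msg) {
  msg.url = buildDemandUrl(new Date());
  return msg;
}
```

Wiring an Inject node set to repeat every 5 minutes into this Function node would then request the current day's data on each tick.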

Thanks a lot Steve,

This helps me a lot, not only with this specific job but also in understanding these dynamic pages. However, I still have one issue: when I use the HTTP request node in Node-RED, even with the return type set to a parsed JSON object, I get a "JSON parse error" and the data comes back as a string. After that I tried adding a JSON parser node, just in case, and another error pops up: "Unexpected token a in JSON at position 0".

The URL where the data is stored is basically the same one you showed in your image; just in case, this is the URL: https://demanda.ree.es/WSvisionaMovilesPeninsulaRest/resources/demandaGeneracionPeninsula?callback=angular.callbacks._3&curva=NACIONAL&fecha=2022-10-11
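The "Unexpected token a" error is consistent with the `callback=angular.callbacks._3` parameter in that URL: with a callback parameter, servers typically answer JSONP style, wrapping the JSON in a JavaScript function call, so the text starts with `angular...` instead of `{` or `[` and `JSON.parse` fails on the very first character. The sketch below uses a made-up, shortened sample (the field names are placeholders, not the site's real data) to show the failure and one way to unwrap the callback before parsing; `tryParse` and `unwrapJsonp` are hypothetical helpers.

```javascript
// Made-up sample in JSONP shape; "rows", "time" and "value" are placeholders.
const sample =
  'angular.callbacks._3({"rows":[{"time":"00:00","value":1},{"time":"00:05","value":2}]});';

// Report whether the raw text parses as JSON, capturing the error message.
function tryParse(text) {
  try {
    return { ok: true, value: JSON.parse(text) };
  } catch (err) {
    return { ok: false, error: err.message };
  }
}

// Strip a leading "callbackName(" and a trailing ")" or ");", then parse.
// The greedy capture runs up to the last ")" so nested parentheses are kept.
function unwrapJsonp(text) {
  const match = /^\s*[\w.$]+\s*\(([\s\S]*)\)\s*;?\s*$/.exec(text);
  if (!match) {
    throw new Error("Response is not in callback(...) form");
  }
  return JSON.parse(match[1]);
}

console.log(tryParse(sample).ok); // false: position 0 is the "a" of "angular"
console.log(unwrapJsonp(sample).rows.length); // 2
```

Unlike slicing between the first `{` and the last `}`, this also copes with a response whose top-level value is an array.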

Can you capture the payload coming out of the http node/debug16 (using the "copy value" button that appears under your mouse when you hover over the payload in the debug view)?

Then paste what you've copied into a response using the code block button.

Edit...

Ignore that. I see the data in that link

2 mins...

Here you go...

```json
[{"id":"baccbef424914807","type":"inject","z":"4c5ad8c7caa80822","name":"","props":[{"p":"payload"},{"p":"topic","vt":"str"}],"repeat":"","crontab":"","once":false,"onceDelay":0.1,"topic":"","payload":"","payloadType":"date","x":2180,"y":1040,"wires":[["0992ade99ca162e2"]]},{"id":"0992ade99ca162e2","type":"http request","z":"4c5ad8c7caa80822","name":"","method":"GET","ret":"txt","paytoqs":"ignore","url":"https://demanda.ree.es/WSvisionaMovilesPeninsulaRest/resources/demandaGeneracionPeninsula?callback=angular.callbacks._3&curva=NACIONAL&fecha=2022-10-11","tls":"","persist":false,"proxy":"","insecureHTTPParser":false,"authType":"","senderr":false,"headers":[{"keyType":"Accept","keyValue":"","valueType":"application/json","valueValue":""}],"x":2330,"y":1040,"wires":[["dd5d18f3f267cf8c"]]},{"id":"dd5d18f3f267cf8c","type":"function","z":"4c5ad8c7caa80822","name":"clean up response","func":"const firstCurly = msg.payload.indexOf('{')\nconst lastCurly = msg.payload.lastIndexOf('}')\nconst json = msg.payload.slice(firstCurly, lastCurly+1)\nmsg.payload = json\nreturn msg;","outputs":1,"noerr":0,"initialize":"","finalize":"","libs":[],"x":2210,"y":1100,"wires":[["4d59de1a7b029e99"]]},{"id":"4d59de1a7b029e99","type":"json","z":"4c5ad8c7caa80822","name":"","property":"payload","action":"","pretty":false,"x":2390,"y":1100,"wires":[["82504265a55fd419"]]},{"id":"82504265a55fd419","type":"debug","z":"4c5ad8c7caa80822","name":"debug 106","active":true,"tosidebar":true,"console":true,"tostatus":false,"complete":"payload","targetType":"msg","statusVal":"","statusType":"auto","x":2370,"y":1160,"wires":[]}]
```
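That flow delivers the whole parsed object. For the original goal, the latest row, a Function node could sit between the JSON node and the debug node and keep only the last element of the array of readings. The real field names are not shown in this thread, so this minimal sketch looks for the first array-valued property instead of naming one, and it assumes rows are ordered oldest to newest (new rows being appended every 5 minutes); `pickLastRow` is a hypothetical name. Paste it into the Function node followed by `return pickLastRow(msg);`, and once the real structure is visible in the debug sidebar, replacing the lookup with the actual property name would be more robust.

```javascript
// Replace msg.payload with the most recent row. Assumptions: the payload is
// either an array of rows or an object holding one array of rows, oldest first.
function pickLastRow(msg) {
  const rows = Array.isArray(msg.payload)
    ? msg.payload
    : Object.values(msg.payload).find(Array.isArray);
  if (!rows || rows.length === 0) {
    // Nothing to pass on: a Function node that returns null sends no message.
    return null;
  }
  msg.payload = rows[rows.length - 1];
  return msg;
}

// Usage with a made-up payload (placeholder field names, not real data).
const out = pickLastRow({
  payload: { rows: [{ time: "00:00", value: 1 }, { time: "00:05", value: 2 }] },
});
console.log(out.payload); // { time: '00:05', value: 2 }
```

Any calculations can then work directly on the fields of `msg.payload` in a following node.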

You are a genius, Steve, thanks a lot. I hope that one day I'll get close to your level of knowledge.
