AFTER EVEN MORE DIGGING AND STUFF.

This is weird.

I send two messages to the TTS node (and, as suggested, we'll ignore the _msgid part).

SOMETIMES it sends out TWO messages.

SOMETIMES it sends out ONE message with two parts.

eg:

(Two parts)

(and gee it would be nicer if it came out structured.)

{"passThroughMessage":{"_msgid":"33144809f7e01833","payload":"Inside temperature 21 degrees","topic":"weather","_event":"node:b93ca2a3e5a8db14","location":{"lon":151.1314,"lat":-33.8886,"city":"Ashfield","country":"AU"},"data":{"coord":{"lon":151.1314,"lat":-33.8886},"weather":[{"id":800,"main":"Clear","description":"clear sky","icon":"01d"}],"base":"stations","main":{"temp":297.82,"feels_like":29,"temp_min":296.9,"temp_max":299.1,"pressure":1015,"humidity":44,"sea_level":1015,"grnd_level":1007},"visibility":10000,"wind":{"speed":9.26,"deg":350},"clouds":{"all":0},"dt":1757986613,"sys":{"type":2,"id":2010638,"country":"AU","sunrise":1757966036,"sunset":1758008834},"timezone":36000,"id":7839683,"name":"Ashfield","cod":200},"time":"11:36","title":"Current Weather Information","description":"Current weather information at coordinates: -33.8886, 151.1314","it":21,"windspeed":9,"settings":{"input":"2025-09-16T01:36:54.020Z","input_format":"","input_tz":"Australia/Sydney","output_format":"HH:mm","output_locale":"en-AU","output_tz":"Australia/Sydney"},"parts":{"index":2,"total":2}},"payload":true,"filesArray":[{"file":"/home/pi/.node-red/sonospollyttsstorage/ttsfiles/210d77a1976da9236fca96c4f1d37086.mp3"},{"file":"/home/pi/.node-red/sonospollyttsstorage/ttsfiles/a94cc63b3683a98297bd0e383bd11d39.mp3"}],"_msgid":"e9c5c07769aa0291","_event":"node:b39c5f7d5fddc27e","alert":null,"postalert":null,"mute":null,"parts":{"index":2,"total":2}}

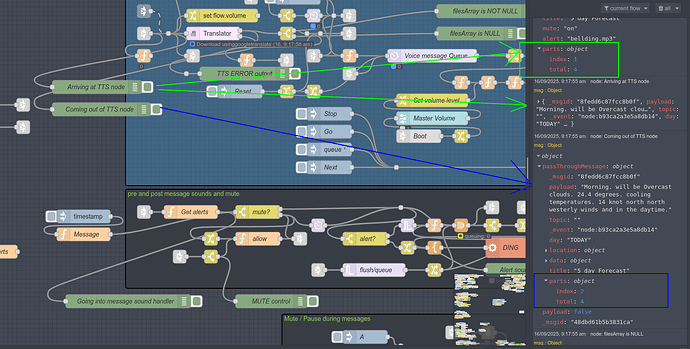

In an attempt to help, here is a screen shot.

Note the filesArray part near the top.

Here is another example, same message sent, and it is in two parts.

I can't find part 1 of the two, but I fear that is because it is being filtered further back by a filter node.

Anyway, the screen shot.

Note how filesArray has only 1 object in it.

The previous one had 2.

(Hurray, I did actually catch one of these rogue messages)

{"passThroughMessage":{"_msgid":"16b4b714f58e4983","payload":"Inside temperature 21 degrees","topic":"weather","_event":"node:b93ca2a3e5a8db14","location":{"lon":151.1314,"lat":-33.8886,"city":"Ashfield","country":"AU"},"data":{"coord":{"lon":151.1314,"lat":-33.8886},"weather":[{"id":800,"main":"Clear","description":"clear sky","icon":"01d"}],"base":"stations","main":{"temp":297.72,"feels_like":29,"temp_min":297.09,"temp_max":299.18,"pressure":1015,"humidity":44,"sea_level":1015,"grnd_level":1007},"visibility":10000,"wind":{"speed":9.77,"deg":340},"clouds":{"all":0},"dt":1757987563,"sys":{"type":2,"id":2010638,"country":"AU","sunrise":1757966036,"sunset":1758008834},"timezone":36000,"id":7839683,"name":"Ashfield","cod":200},"time":"12:01","title":"Current Weather Information","description":"Current weather information at coordinates: -33.8886, 151.1314","it":21,"windspeed":10,"settings":{"input":"2025-09-16T02:01:11.723Z","input_format":"","input_tz":"Australia/Sydney","output_format":"HH:mm","output_locale":"en-AU","output_tz":"Australia/Sydney"},"parts":{"index":2,"total":2}},"payload":false,"_msgid":"1bf32398099e7a50"}

(screen shot)

Although this one is also marked as part 2 of 2.
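To make the two cases easier to compare side by side, something like the following could go in a function node after the TTS node. It is only a rough sketch: `summarise` is a name I made up, and it assumes the output always has the shape shown in the dumps above (`filesArray`, `passThroughMessage`, `parts`), falling back to empty values when a field is missing.

```javascript
// Hypothetical helper (not part of the TTS node): reduce an output message
// to the handful of fields that differ between the two dumps above.
function summarise(msg) {
    // filesArray is missing entirely in the second dump, so default to [].
    const files = Array.isArray(msg.filesArray) ? msg.filesArray : [];
    const inner = msg.passThroughMessage || {};
    const parts = inner.parts || {};
    return {
        msgid: msg._msgid,
        payload: msg.payload,        // true in the first dump, false in the second
        fileCount: files.length,     // number of mp3 entries in filesArray
        spokenText: inner.payload,   // the text that was sent in
        partIndex: parts.index,
        partTotal: parts.total
    };
}

// Inside a Node-RED function node this could be used as:
//   node.warn(JSON.stringify(summarise(msg), null, 2));
//   return msg;
```

The `JSON.stringify(..., null, 2)` call also gives the indented, structured output that the raw dumps lack.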

Any thoughts / ideas on what's going on and how to work around it?